I would like to quickly follow up on my previous post on explicitly collaborative information seeking. My claim in that post was that, despite the shared terminology, a service like Aardvark (or Twitter) is not truly collaborative.

Let me be clear about Aardvark: What that service does is help you comb through a network of people to find those individuals who have the highest likelihood of holding the answer to your information need. Somebody has the answer; you just don’t know who it is. So Aardvark helps you find that somebody. The reason this is different from what I am talking about with explicit collaboration is that in this latter case, you already know who it is that you want to work with on resolving a shared information need. You want to work with a relationship partner on finding an apartment. You want to work with a business colleague on finding potential markets for a new product. You want to work with some buddies on planning a road trip. In all of these situations, your partner, your colleague, and your buddies don’t already have the answers that you seek. But you do know that you want to work with them to find those answers because they have the same need that you do. Your partner wants to live with you, your business colleague wants to work with you, and your buddies want to travel with you. This is what explicitly collaborative information seeking is about, and it’s not the same thing as the “collaborative” category discussed in the panel.

Case in point: Take a look at the panel’s slides: http://www.slideshare.net/bmevans/introductory-slides. Slide 9 outlines the two main social strategies: (1) ask the network, and (2) embark alone. This misses a third major, but as yet untapped, strategy: (3) embark together.

A good way to think about this is in terms of who is actually seeking information. In both the (1) ask the network and (2) embark alone strategies, there is only a single user with an actual information need, a single person who is actively seeking information. Using Aardvark, that person asks other people in the network whether they can give an answer that satisfies the need. But those other individuals do not actively share the information need. They either (a) already have the information that you seek, and thus already have a satisfied information need, or (b) do not have the information you seek, but do not care, i.e. they do not share your information need (they aren’t going to move in with you, or go on that road trip with you). When you ask the network, you are not actually involved in collaborative information seeking. There is only a single seeker: You. You are simply tapping into the network to find those people who already have the information you need. It is still the single individual, not the network, that has the information need and that is actively engaged in the seeking process.

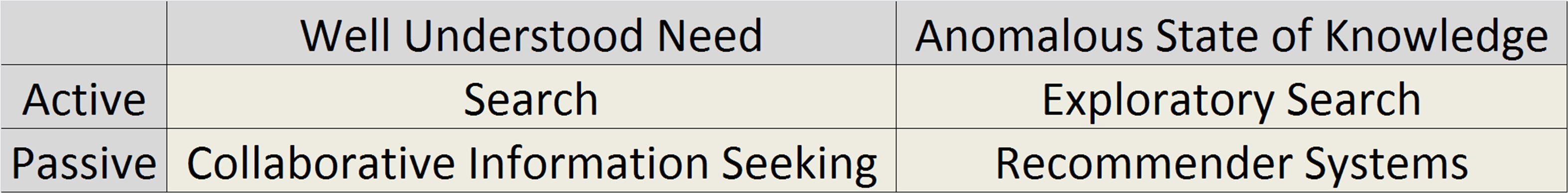

But embarking together with one or two other individuals who also lack information, i.e. engaging in explicitly collaborative information seeking, is an entirely different process. In this case, there are at least two information seekers, two people who have a shared, as-yet-unsatisfied, information need. Now, there are a number of different ways you can build systems and design interfaces to support these multiple seekers in their task. I’ve written a lot about such systems on this blog and on the FXPAL blog, and will not go into further detail right now. The point is simply that embarking together is an information seeking strategy that was not covered by either of the strategies on the panel’s slide. It is not the same as asking the network. It is not the same as embarking alone. It is a third process, a third strategy, and one that remains quite untapped in today’s marketplace.

Update: I have a final quick example. On his live blog, Danny Sullivan paraphrases Max from Aardvark: “We want to do that across communication channels, so you can find partners to go bike with”. That’s Aardvarkian social search: You want to find the people to go biking with. Collaborative search is the next phase. Once you’ve found the people that you explicitly know that you want to go biking with, how do you find out where you want to go? You know about all the bike trails around your house. Your new biking partner knows about all the trails near her house. But neither of you knows about the trails that exist halfway between your two houses. Ideally, you’d like to find one of those trails that is good for both of you, but neither of you is aware of them yet. (And why should you have been? Before meeting your partner, you had no reason to venture away from your favorite nearby trails.) THAT is explicitly collaborative information seeking. When both of you actively look for new bike trails, that is embarking together.